Bagging in Machine Learning (GeeksforGeeks)

A Computer Science portal for geeks.

Bagging: Ensemble Meta-Algorithm for Reducing Variance, by Ashish Patel (ML Research Lab, Medium)

November 22, 2021

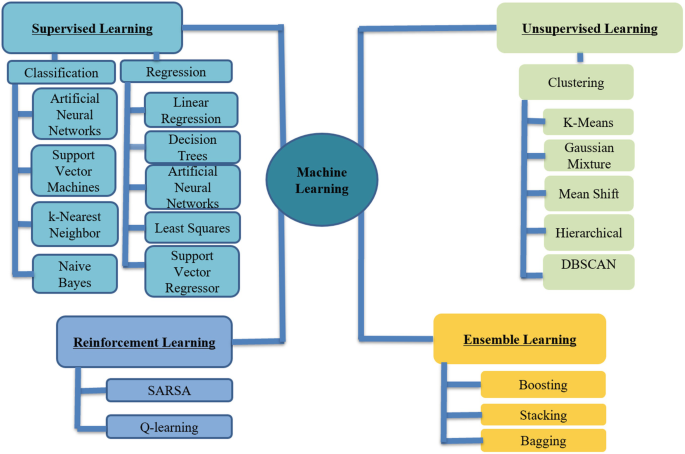

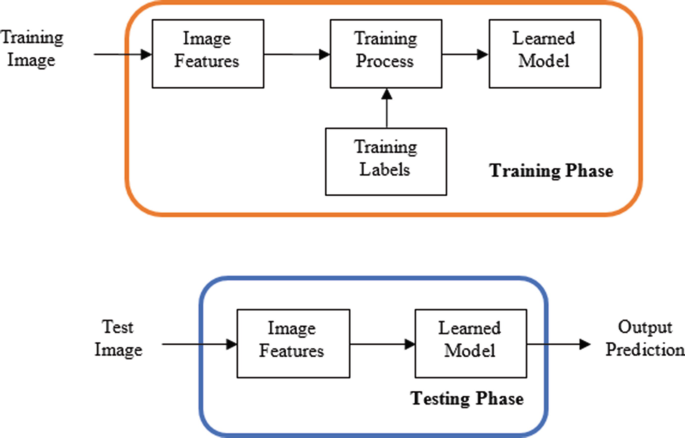

Machine learning is the field of study that gives computers the capability to learn without being explicitly programmed. Stacking is a way to ensemble multiple classification or regression models.

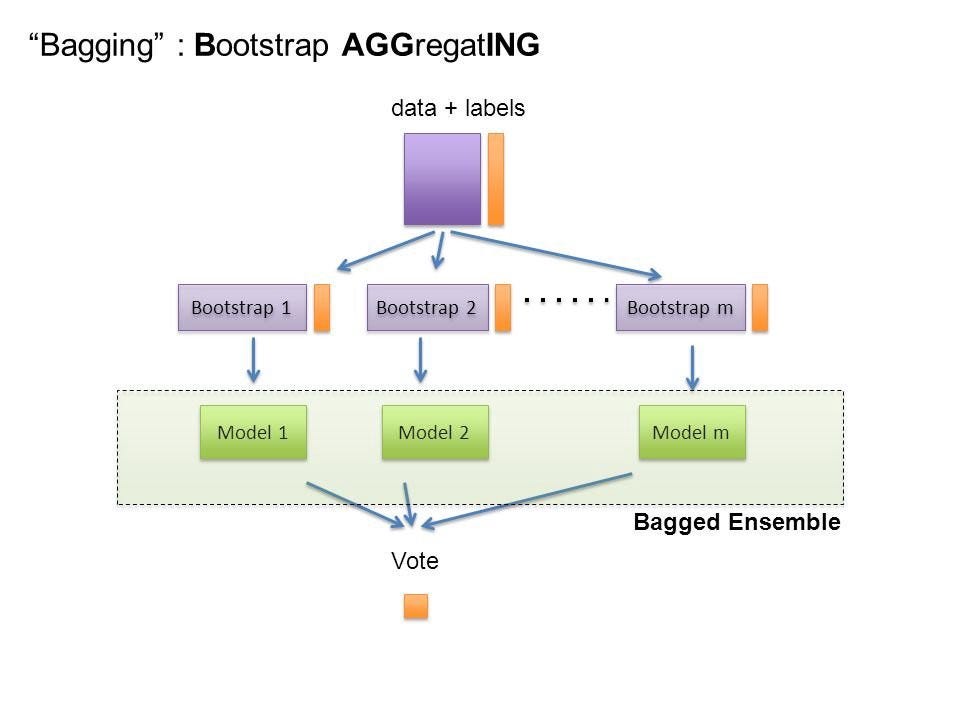

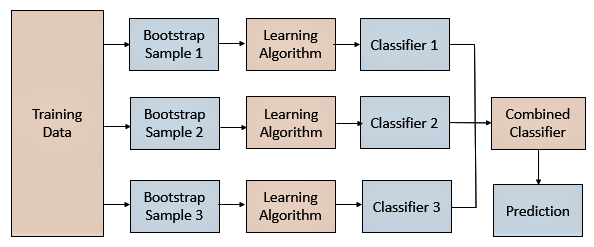

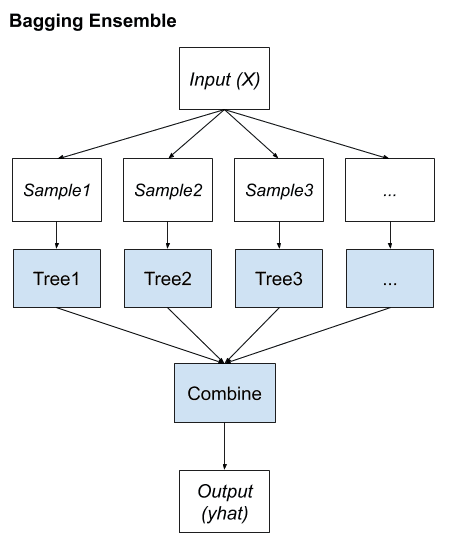

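In bagging, a random sample of the training set is drawn with replacement. Bagging uses the following method: take bootstrapped samples from the original dataset, fit a model to each sample, and aggregate the models' predictions.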

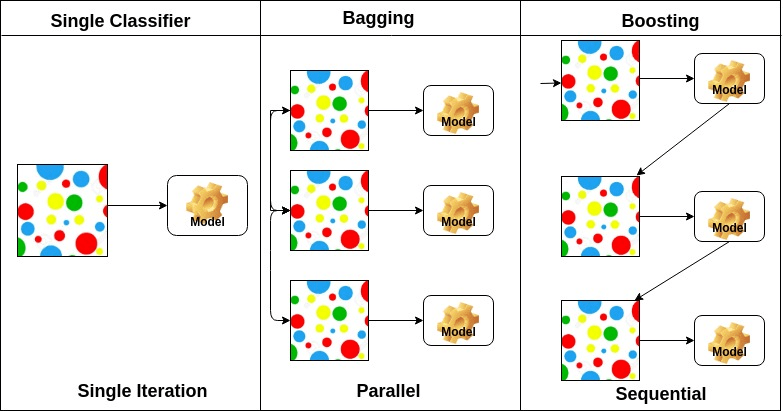

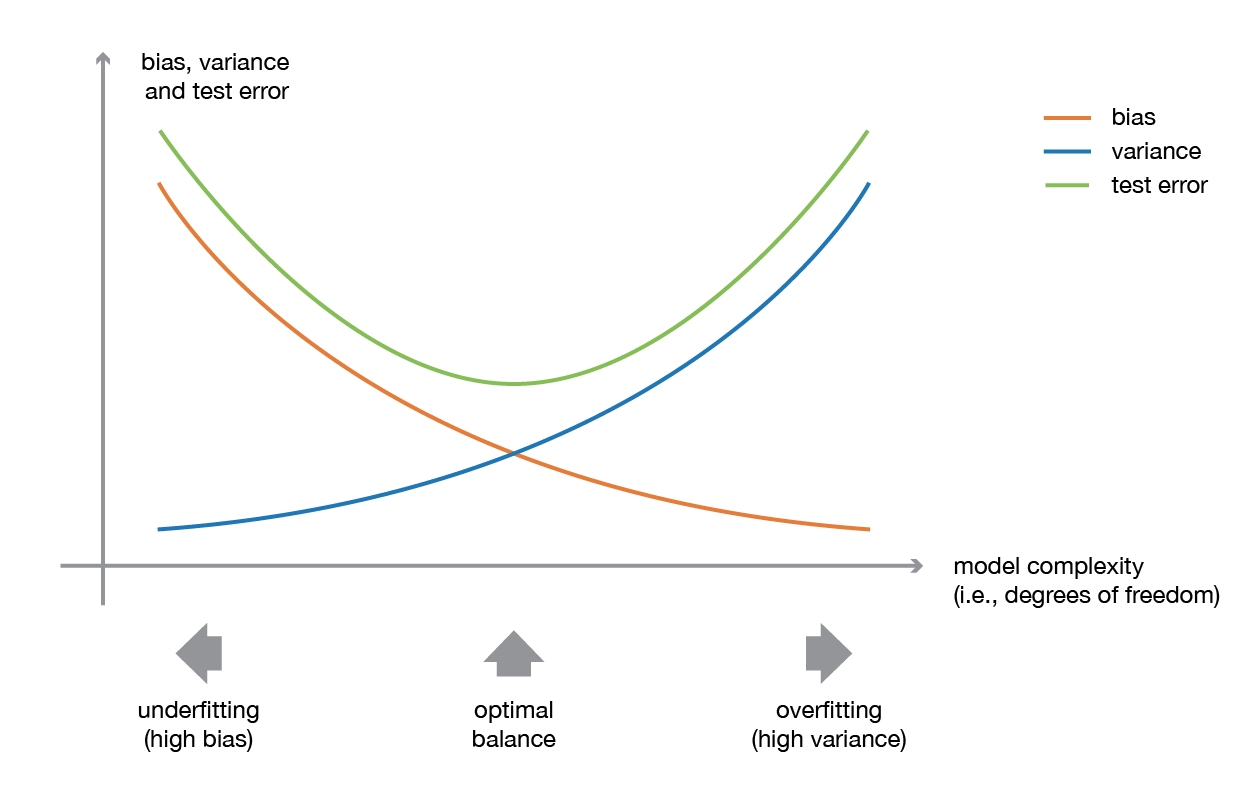

Boosting is an ensemble modeling technique that attempts to build a strong classifier from a number of weak classifiers. Ensemble machine learning can be mainly categorized into bagging and boosting.

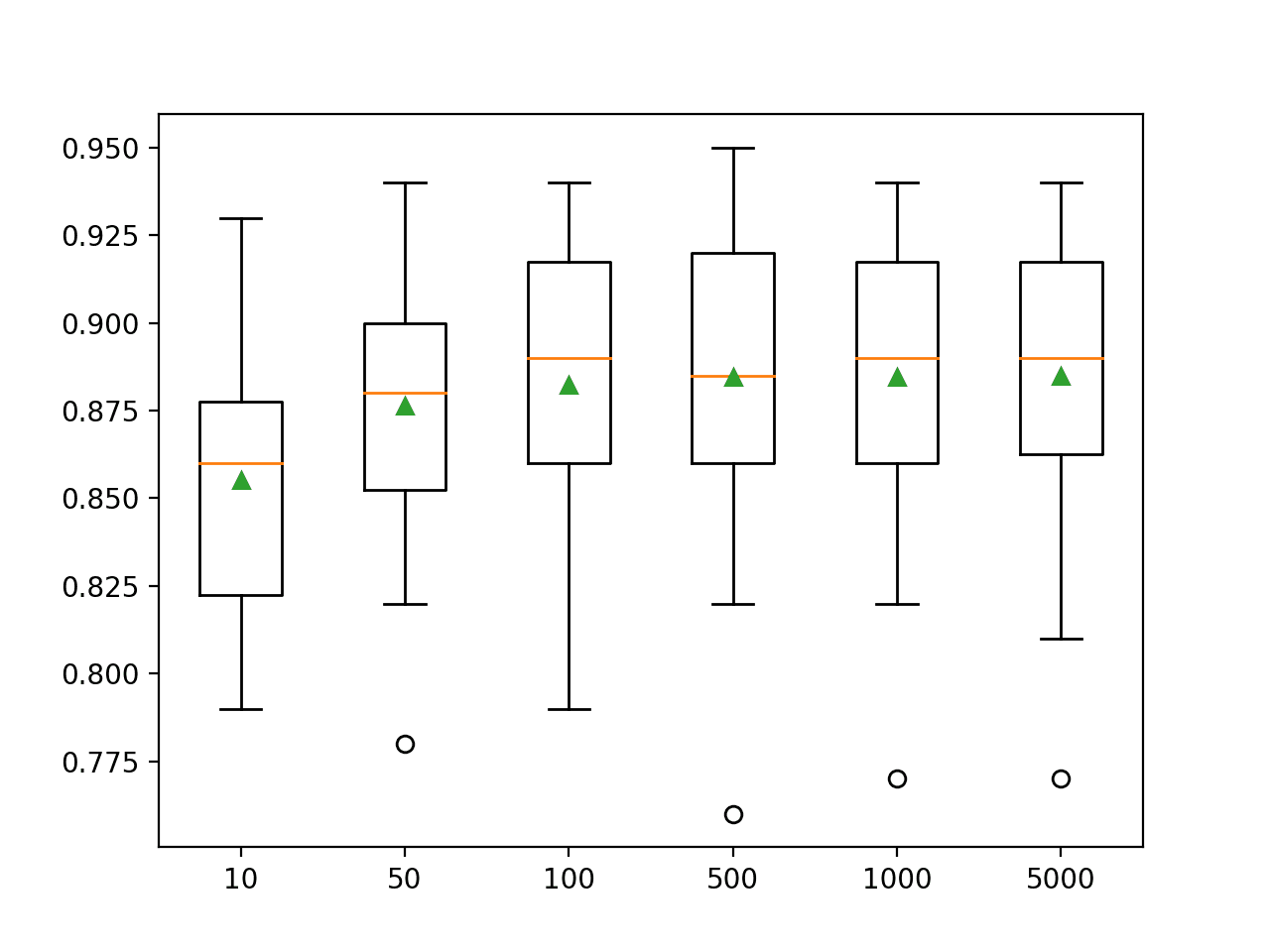

Take b bootstrapped samples from the original dataset. Machine learning is one of the most exciting technologies one can come across. The Id column does not participate in any prediction, so it can be dropped.
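The first step, taking b bootstrapped samples, can be sketched in a few lines of plain Python. This is only an illustration: the function name `bootstrap_samples`, the fixed seed, and the toy `dataset` are assumptions made for the example, not part of the original article.

```python
import random

def bootstrap_samples(data, b, seed=0):
    """Return b bootstrapped samples, each the same size as data.

    Every sample is drawn from data with replacement, so some observations
    can appear more than once while others are left out.
    """
    rng = random.Random(seed)  # fixed seed only so the sketch is repeatable
    n = len(data)
    return [[data[rng.randrange(n)] for _ in range(n)] for _ in range(b)]

dataset = [2.1, 3.4, 1.9, 5.0, 4.2, 3.3]  # made-up observations
samples = bootstrap_samples(dataset, b=3)
for i, sample in enumerate(samples, start=1):
    print(f"sample {i}: {sample}")
```

Each printed sample has the same length as the original dataset but usually repeats some values, which is what makes the models trained on them differ from one another.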

Boosting is done by building a model from weak models in series. Ensemble learning is a machine learning paradigm where multiple models, often called weak learners, are trained to solve the same problem and combined to get better results.

Boosting builds multiple incremental models to decrease bias.
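As a rough illustration of building incremental models, here is a minimal AdaBoost-style sketch with one-dimensional decision stumps as the weak learners. AdaBoost is one specific boosting algorithm chosen for the example; the helper names (`best_stump`, `adaboost`, `predict`) and the toy data are hypothetical.

```python
import math

def best_stump(xs, ys, weights):
    """Find the threshold/polarity stump with the lowest weighted error."""
    best = None
    for threshold in sorted(set(xs)):
        for polarity in (1, -1):
            preds = [polarity if x <= threshold else -polarity for x in xs]
            err = sum(w for w, p, y in zip(weights, preds, ys) if p != y)
            if best is None or err < best[0]:
                best = (err, threshold, polarity)
    return best

def adaboost(xs, ys, rounds):
    """Train `rounds` stumps in sequence; labels must be +1 or -1."""
    n = len(xs)
    weights = [1 / n] * n
    ensemble = []  # list of (alpha, threshold, polarity)
    for _ in range(rounds):
        err, threshold, polarity = best_stump(xs, ys, weights)
        err = min(max(err, 1e-10), 1 - 1e-10)    # guard against log(0)
        alpha = 0.5 * math.log((1 - err) / err)  # say of this weak learner
        ensemble.append((alpha, threshold, polarity))
        # Raise the weight of misclassified points, lower it for correct ones.
        preds = [polarity if x <= threshold else -polarity for x in xs]
        weights = [w * math.exp(-alpha * y * p)
                   for w, y, p in zip(weights, ys, preds)]
        total = sum(weights)
        weights = [w / total for w in weights]
    return ensemble

def predict(ensemble, x):
    """Weighted vote of all stumps in the ensemble."""
    score = sum(a * (pol if x <= t else -pol) for a, t, pol in ensemble)
    return 1 if score >= 0 else -1

xs = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
ys = [1, 1, 1, -1, -1, -1, 1, 1, 1, -1]  # no single stump separates these
model = adaboost(xs, ys, rounds=3)

single_acc = sum(predict(model[:1], x) == y for x, y in zip(xs, ys)) / len(xs)
train_acc = sum(predict(model, x) == y for x, y in zip(xs, ys)) / len(xs)
print(f"first stump alone: {single_acc:.1f}, three boosted stumps: {train_acc:.1f}")
```

On this toy data the first stump alone gets 7 of 10 training points right, while the weighted vote of three stumps classifies all 10 correctly. Each later stump concentrates on the points the earlier ones got wrong.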

Recall that a bootstrapped sample is a sample of the original dataset in which the observations are taken with replacement.

Boosting is a method of merging different types of predictions. Bootstrap aggregating, also called bagging, is one of the first ensemble algorithms machine learning practitioners learn and is designed to improve the stability and accuracy of regression and classification algorithms. Fill the empty slots with the mean, mode, 0, NA, etc., depending on the dataset requirement.
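Filling the empty slots in a column might look like the sketch below. It assumes missing values are represented as `None`; the function name `fill_missing` and the `ages` column are made up for illustration.

```python
import statistics

def fill_missing(values, strategy="mean"):
    """Replace None entries with the mean, the mode, or 0 of the column."""
    present = [v for v in values if v is not None]
    if strategy == "mean":
        fill = statistics.fmean(present)
    elif strategy == "mode":
        fill = statistics.mode(present)
    elif strategy == "zero":
        fill = 0
    else:
        raise ValueError(f"unknown strategy: {strategy}")
    return [fill if v is None else v for v in values]

ages = [22, None, 35, 22, None, 41]  # made-up column with two empty slots
print(fill_missing(ages, "mean"))    # [22, 30.0, 35, 22, 30.0, 41]
print(fill_missing(ages, "mode"))    # [22, 22, 35, 22, 22, 41]
```

Which strategy is appropriate depends on the dataset: the mean suits roughly symmetric numeric columns, while the mode also works for categorical ones.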

Bagging averages multiple similar models with high variance to decrease variance.
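The variance-reduction claim can be checked with a small simulation. The sketch below is one possible setup rather than a definitive experiment. It assumes a 1-nearest-neighbour regressor as the high-variance base model, a sine curve with Gaussian noise as the data, and hypothetical helper names `fit_1nn` and `fit_bagged`.

```python
import math
import random
import statistics

def fit_1nn(points):
    """Return a 1-nearest-neighbour regressor (a deliberately high-variance model)."""
    def predict(x):
        nearest = min(points, key=lambda p: abs(p[0] - x))
        return nearest[1]
    return predict

def fit_bagged(points, n_models, rng):
    """Fit n_models 1-NN regressors on bootstrapped samples and average them."""
    n = len(points)
    models = [
        fit_1nn([points[rng.randrange(n)] for _ in range(n)])
        for _ in range(n_models)
    ]
    return lambda x: statistics.fmean(m(x) for m in models)

rng = random.Random(42)
xs = [i / 5 for i in range(30)]  # fixed inputs on a grid
query = 2.05                     # point at which predictions are compared

single_preds, bagged_preds = [], []
for _ in range(200):             # 200 independent noisy training sets
    train = [(x, math.sin(x) + rng.gauss(0, 0.5)) for x in xs]
    single_preds.append(fit_1nn(train)(query))
    bagged_preds.append(fit_bagged(train, n_models=25, rng=rng)(query))

single_var = statistics.variance(single_preds)
bagged_var = statistics.variance(bagged_preds)
print(f"variance of a single model: {single_var:.3f}")
print(f"variance of bagged models:  {bagged_var:.3f}")
```

The bagged prediction is effectively a weighted average over several nearby training points instead of a copy of one noisy point, so its variance across training sets should come out noticeably lower.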

Bagging decreases variance, not bias.

There are many ways to ensemble models; the most widely known are bagging and boosting. Bagging, also known as bootstrap aggregation, is an ensemble learning method that is commonly used to reduce variance within a noisy dataset. Bagging is a method of merging the same type of predictions.
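For classification, merging predictions of the same type is commonly done by majority vote. The snippet below is a minimal sketch of that aggregation step only; the `votes` list stands in for the outputs of five already-trained bagged models and is invented for the example.

```python
from collections import Counter

def majority_vote(predictions):
    """Return the most common label among the base models' predictions."""
    return Counter(predictions).most_common(1)[0][0]

votes = ["spam", "ham", "spam", "spam", "ham"]  # made-up outputs of five models
print(majority_vote(votes))  # spam
```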


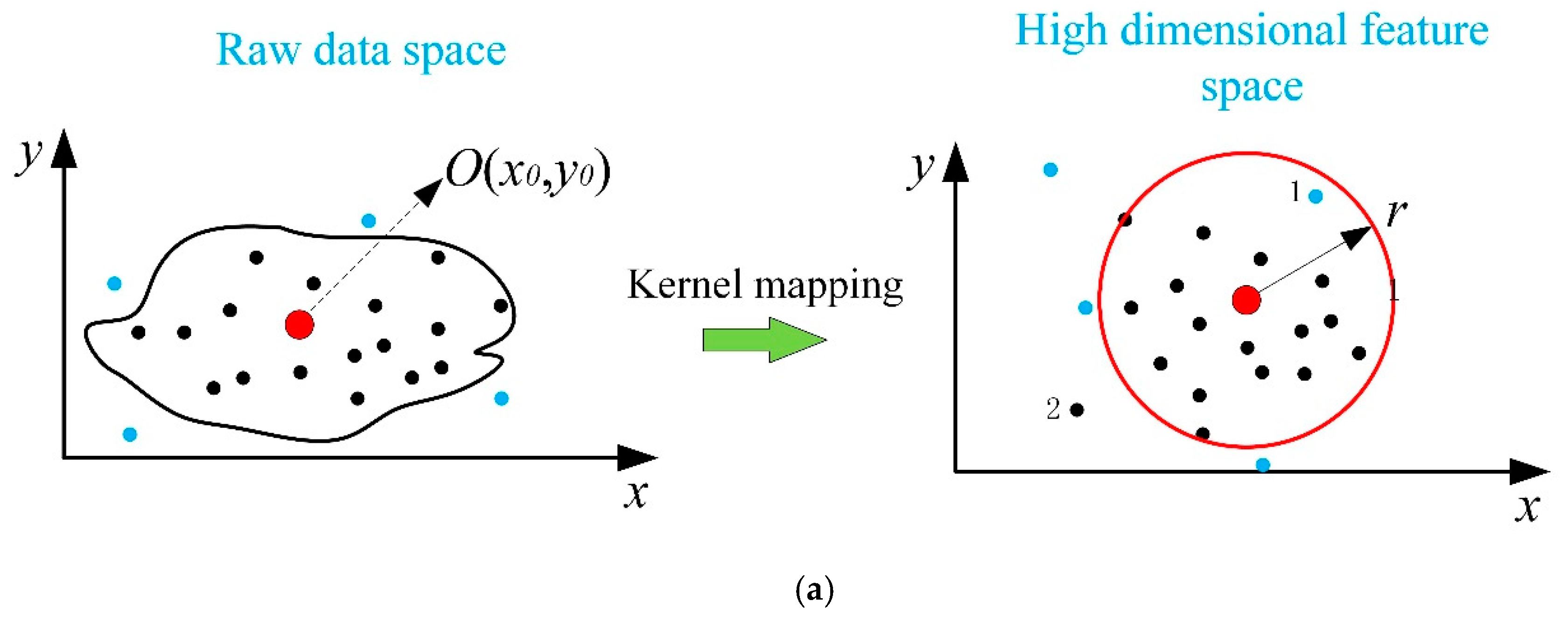
